The evolution of AI-assisted programming has reached a pivotal moment, transforming from simple code completion to truly collaborative AI agents. This shift represents not just an improvement in tooling, but a fundamental change in how software is developed.

But does all this AI coding work?

The short answer is YES. It’s a new era. I suspect many of you don’t think so. But this isn’t a fad. The whole job of what it means to be a Software Engineer is changing. Massively. Robots that write software are here, now.

But how did we get here?

Other than some folks asking ChatGPT to write some code, it all began with GitHub Copilot in June 2021. It introduced AI-powered code completion that could suggest entire functions. While revolutionary at the time, it was fundamentally reactive - waiting for developers to write code before making suggestions. In February 2023, the Cursor editor launched with the first implementation of true “agent mode” capabilities, demonstrating that AI could maintain context across entire conversations about code. This was followed by Amazon CodeWhisperer’s integration of conversation memory in late 2023, and Anthropic’s Claude extension for VS Code in early 2024, which brought sophisticated project-wide understanding to mainstream IDEs.

What made these early agents different was their ability to maintain context across entire conversations and understand project-wide implications of changes. However, they still struggled with complex architectural decisions and often needed extensive prompting to produce reliable code.

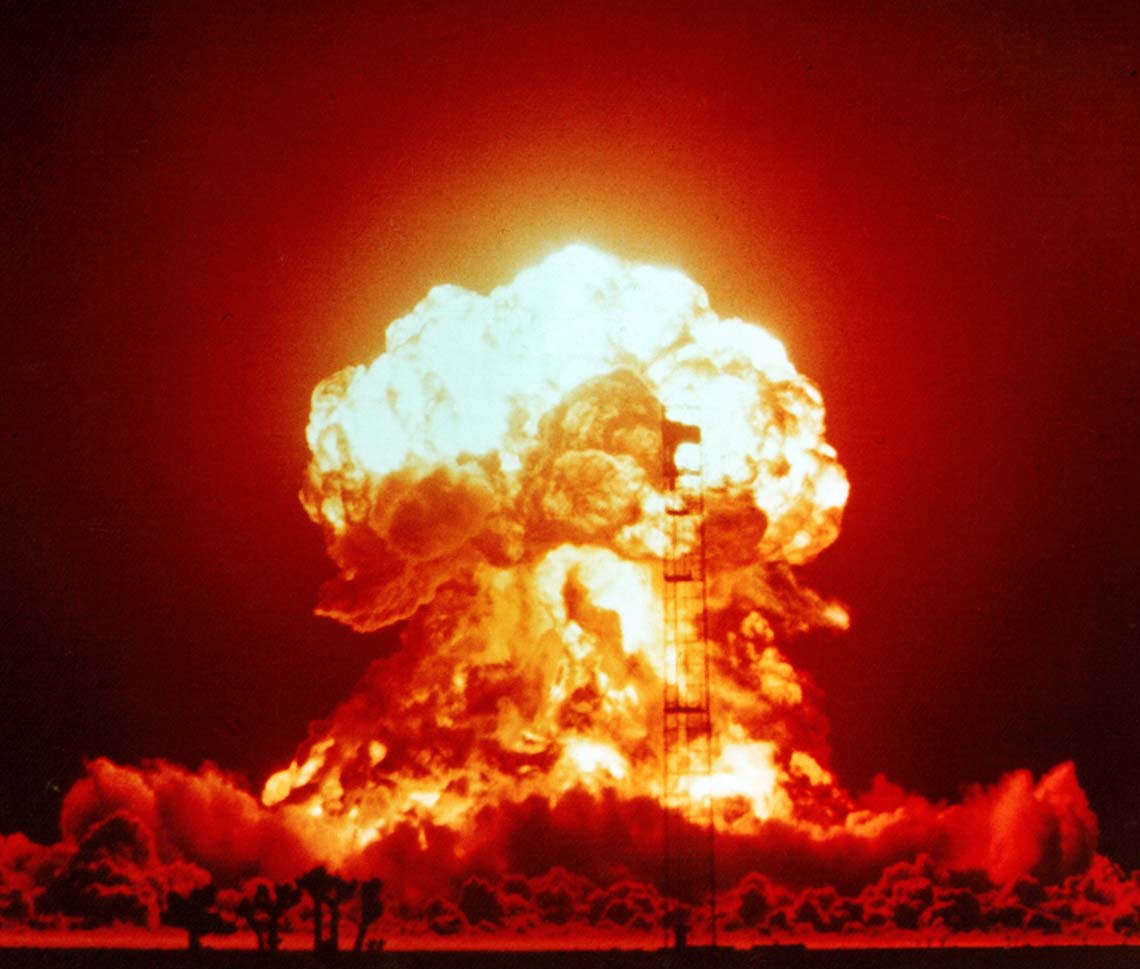

It became an arms race.

The 2024 Breakthrough

The major technical breakthrough in mid-2024 came from advances in LLMs. Multiple folks directly building LLMs are saying that there is a step increase in capability EVERY TWO MONTHS. This isn’t just incremental improvement - it’s a full step change in capability. EVERY TWO MONTHS.

Think about that. Moore’s Law says chip capability doubles roughly every two years. AI models are doing that in TWO MONTHS.
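To make that comparison concrete, here is a small back-of-the-envelope sketch. It assumes each "step increase" is a clean doubling, which is my simplification and not something the model builders actually claimed. The function name `growth_factor` is just illustrative.

```python
def growth_factor(months: float, doubling_period_months: float) -> float:
    """Return how many times capability multiplies over `months`,
    assuming it doubles once every `doubling_period_months`."""
    return 2 ** (months / doubling_period_months)


# Compare both curves over the same two-year window.
horizon_months = 24
moore = growth_factor(horizon_months, 24)  # one doubling in two years
ai = growth_factor(horizon_months, 2)      # twelve doublings in two years

print(f"Moore's Law pace: {moore:.0f}x")
print(f"Two-month pace:   {ai:.0f}x")
```

Under that assumption, one curve doubles once in two years while the other doubles twelve times. Even if the real number is far smaller, the compounding is the point.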

Agents are Ephemeral Robots

The model is just the brain. If you want it to DO THINGS in the real world (like write code for you, or control DevOps for you) then you need it to become a robot. Yes, a robot.

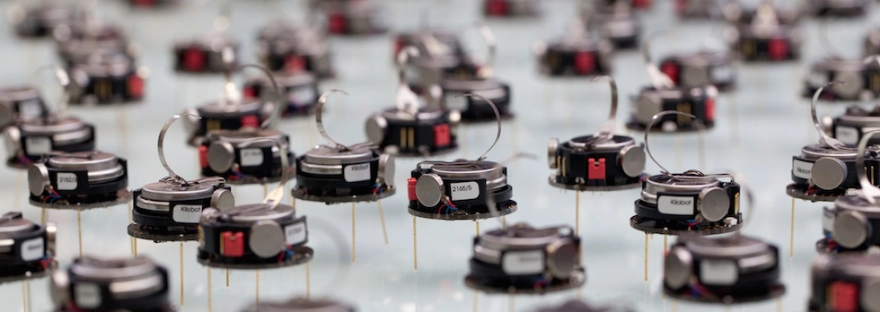

What we are calling AI agents today are basically ephemeral robots. No motors or sensors, but definitely wired to the real world. How do they do that? The Model Context Protocol (MCP). Just SIX MONTHS AGO (yes, 6) Anthropic released the specification for MCP. Within six months there are HUNDREDS of MCP servers out there. See the official list and the awesome list.

What do you want to control from your AI chat? Slack? Jira? Snowflake? Any database you have? Your file system? All of that and much more is there already.

But… how do you get swarms of robots? The Agent-to-Agent (A2A) specification. This was announced by Google TWO WEEKS AGO. Over 50 technology companies publicly support and promote this specification already.

Unless you were actually watching, you didn’t see these robots arrive. But they arrived in force in late 2024 and they became a tsunami in early 2025.

And the arms race became nuclear.

What are MCP and A2A?

Model Context Protocol (MCP) enables AI agents to actually DO THINGS. Like go gather data. And write files. Or hit an API to make something happen. And effectively build a workflow. It’s basically a standard software interface to the robot arm or motor.
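To give a feel for what that "standard interface" looks like, here is a toy sketch. MCP messages are JSON-RPC 2.0, and a tool invocation uses the `tools/call` method with a tool `name` and `arguments`. Everything else here is my own illustration: the `word_count` tool, the `TOOLS` registry and the `handle_request` function are hypothetical, and a real server would use an MCP SDK plus a transport instead of a bare function call.

```python
import json
from typing import Callable, Dict

# Hypothetical in-memory tool registry (illustration only, not an MCP SDK).
TOOLS: Dict[str, Callable[..., str]] = {}


def tool(name: str):
    """Register a plain function as a callable tool under `name`."""
    def register(fn: Callable[..., str]) -> Callable[..., str]:
        TOOLS[name] = fn
        return fn
    return register


@tool("word_count")
def word_count(text: str) -> str:
    """Example tool: count whitespace-separated words."""
    return str(len(text.split()))


def handle_request(raw: str) -> str:
    """Handle one JSON-RPC 2.0 request shaped like an MCP tool call."""
    request = json.loads(raw)
    reply = {"jsonrpc": "2.0", "id": request.get("id")}
    if request.get("method") != "tools/call":
        reply["error"] = {"code": -32601, "message": "Method not found"}
        return json.dumps(reply)
    params = request.get("params", {})
    fn = TOOLS.get(params.get("name"))
    if fn is None:
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
        return json.dumps(reply)
    text = fn(**params.get("arguments", {}))
    # Tool results come back as a list of content items.
    reply["result"] = {"content": [{"type": "text", "text": text}], "isError": False}
    return json.dumps(reply)


request = json.dumps({
    "jsonrpc": "2.0",
    "id": 1,
    "method": "tools/call",
    "params": {"name": "word_count", "arguments": {"text": "robots write code"}},
})
print(handle_request(request))
```

The model never touches Slack or your database directly. It emits a structured request like the one above, and the server on the other end does the actual work.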

The Agent-to-Agent (A2A) specification (announced just weeks ago) takes this further, enabling multiple specialized agents to collaborate on complex tasks. The idea is a division of labor:

- One agent might focus on architectural decisions

- Another could specialize in security reviews

- A third might handle test generation

- While others could focus on documentation and maintenance
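The division of labor in that list can be sketched as a tiny coordinator that hands each step of a plan to whichever agent advertises the matching skill. To be clear, this is NOT the A2A wire format. The `Agent` class, `build_registry`, `delegate` and the agent names are all hypothetical, and the "agents" are plain functions standing in for real model-backed services.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Agent:
    """A stand-in for a specialized agent (hypothetical structure)."""
    name: str
    skill: str
    run: Callable[[str], str]


def build_registry(agents: List[Agent]) -> Dict[str, Agent]:
    """Index agents by the single skill each one advertises."""
    return {agent.skill: agent for agent in agents}


def delegate(registry: Dict[str, Agent], plan: List[str], change: str) -> List[str]:
    """Send `change` to the agent for each skill in `plan`, in order."""
    results = []
    for skill in plan:
        agent = registry.get(skill)
        if agent is None:
            results.append(f"[unassigned] no agent offers '{skill}'")
        else:
            results.append(f"[{agent.name}] {agent.run(change)}")
    return results


registry = build_registry([
    Agent("architect", "architecture", lambda c: f"reviewed the design of {c}"),
    Agent("sentinel", "security", lambda c: f"scanned {c} for vulnerabilities"),
    Agent("tester", "tests", lambda c: f"generated tests for {c}"),
])

for line in delegate(registry, ["architecture", "security", "tests", "docs"], "the login module"):
    print(line)
```

The interesting part of a real multi-agent protocol is everything this sketch skips: discovery, authentication, long-running tasks and agents built by different vendors.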

This is all foundational stuff. I do wonder if MCP will be the new SOAP (credit to Scott Francis for that insight) but time will tell. We’ll adapt, just like we did when REST came out.

So What is Agentic Programming?

Agentic programming is using AI models combined with code editing agents to completely change how software is designed and written. This is radically different from just last winter. Major platforms have embraced agentic programming:

- Cursor Editor

- Visual Studio Code with GitHub Copilot integration

- JetBrains

- Windsurf (formerly Codeium)

And then there are the models, which are getting better every day. I’m using Claude 3.5 Sonnet now. By the time that you read this it may be something newer.

In fact, I’m playing with Ollama - a tool that lets you run models locally. No remote AI vendor needed. It’s stunning to make it all work on your own computer (mine is admittedly a really powerful one - an MBP with an M4 Pro and 48GB of RAM).

All of these can basically be used to write entire applications nearly autonomously. The Software Engineer interacts with the AI agent by providing prompts (instructions). The AI writes code. For crude, limited applications you may not even need to know how to code. That kind of development is called vibe coding, and there are already some serious stories floating around about the security and fraud impacts of turning these kinds of apps loose in the wild.

But my point? It’s not an all-or-nothing thing. Even if the agent can’t build it all for you automatically, it’s a huge boost in productivity. If you were building a house would you really cut all the wood by hand?

Not me. I’m using a power saw. Who will build the house faster?

My Early Results

I’ve built a few smaller projects in C, Go and TypeScript. None of them were of significant size. The time savings to at least get a working scaffolding are insane. It’s at least 100x.

Yes. 100x. I can get 100 times the work done in a unit of time. At least for the “scaffolding” work.

The agent requires guidance. You really have to write your specifications clearly. Just like in real products, if you are fuzzy in your ask, it’s likely to become something other than what you intended.

You need to really break things down into small, clear parts. In writing. Doing this is a skill. In fact, I think it’s going to become the core skill in Software Engineering from here out.

But don’t just take my word for it. Andrew Ng is perhaps one of the most impactful AI inventors, founder of DeepLearning.AI and a former professor of CS/AI at Stanford. His online classes are basically table stakes for anyone interested in how all this works. He posted yesterday that “AI-assisted coding, including vibe coding, is making specific programming languages less important, even though learning one is still helpful to make sure you understand the key concepts.”

My experience today is that these tools can write code about as well as a junior developer. For web stuff probably as well as an intermediate developer.

Do I completely trust it? No. Of course not. Do you trust all the code that your junior developers write? I hope not. On my team we still require a second code review and that cannot be done by AI.

Does the agent write the code the way I would? Rarely. In fact, the code I’ve seen it write does not really follow the design patterns that I would use. But why do we use design patterns? We use them for two reasons:

- they are tried and true patterns to solve a problem

- in a way that other humans can grok when looking at the code later

But… for a lot of modules, there’s no real need for a human to grok the code. If you really embrace this and have the AI write your tests first (you do write tests first, right?) and if you review those tests carefully and if the AI-generated code is passing the tests… then do you really care it didn’t use the design pattern you would have?

Because now you can do things like this:

Inspect the code in this repository and write a design document about how it works.

Your audience is an experienced software engineer.

Generate block diagrams, sequence diagrams and state diagrams as needed using mermaid.

Try that. You’ll be amazed.

In future posts I’ll write about how I think this will impact the actual career of Software Engineers.

What’s Next for Me?

Keep testing this! Embrace! Learn what it can and cannot do. Folks, it’s real. It’s not going away. The robot swarm is coming. And I’ll tell you about it as I go.