What exactly is Cloudy DevOps? How do you go from classic data center Enterprise deployments to the cloud? Here’s some of what I’ve learned across a half-dozen teams who’ve done it.

So you have read my blog post about How To Do DevOps. You agree with the principles of aligning the goals of Dev and Ops, having those two teams work together every day, and treating Ops as a software problem. Great principles. But tactically speaking, what do you do to move towards DevOps in the cloud?

It’s All About Maturing

So you have a monolithic, Enterprise application. It’s hosted in your data center. You want to move to the cloud. You want to deploy new features faster. You want to scale up and down with your business without spending huge sums of capital up front. You want even higher availability without paying many multiples more than you are now. You want Cloudy DevOps.

Everyone has to start somewhere. DevOps is iterative, and it’s best done in small steps. You might be lucky enough to be doing a brand-new startup or a green field project and can start “right,” but probably not. Most likely you are where you are and you want to “get better.” That’s the norm. It’s like a caterpillar becoming a butterfly: it’s all about the maturation process.

You may or may not be doing things in an agile way yet, but that’s really the place to start. The whole approach to getting from where you are to where you want to be is an agile one. Pick scrum, kanban, or some merger of the two (scrumban), but head in that direction. If you are really serious, do it right. Scrum does not work unless you actually track your delivered story points to know what your capacity is, and then use that capacity to estimate when features will be delivered. If you revert to just pushing teams to deliver against a schedule that you mandate… then you are not doing scrum. Likewise, if you are doing kanban, you need to set a work-in-progress limit (WIP limit), measure against it, and honor it. If you want to predict when things will be done, you will also need to either break work into similar-sized chunks or use a story point approach. Setting a date for delivering finished software without tracking team “feature velocity” is not agile. If you want to do cloud DevOps, fix your agile story first.
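Using delivered story points to forecast, rather than mandating a date, is simple arithmetic. The following is a minimal Python sketch, not a prescribed scrum formula: it assumes you record the points actually delivered in each sprint, and the three-sprint window and the sample numbers are illustrative assumptions.

```python
import math
from statistics import mean


def forecast_sprints(completed_points, backlog_points, window=3):
    """Estimate how many sprints the remaining backlog will take.

    Returns (optimistic, expected, pessimistic) sprint counts, based on the
    best, average, and worst velocity over the last `window` sprints.
    """
    recent = completed_points[-window:]  # only recent sprints reflect current capacity
    if not recent or min(recent) <= 0:
        raise ValueError("need recent sprints with positive delivered points")
    return (
        math.ceil(backlog_points / max(recent)),
        math.ceil(backlog_points / mean(recent)),
        math.ceil(backlog_points / min(recent)),
    )


# Hypothetical history: story points actually delivered in the last five sprints.
history = [18, 22, 20, 25, 23]
print(forecast_sprints(history, backlog_points=90))  # (4, 4, 5)
```

Reporting a range instead of a single date is deliberate: it keeps the forecast honest about variation in the team's measured velocity.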

The funny thing is that moving to agile gets easier as you break your system down into micro-services and take a cloud-native approach. The more you decouple things, the more you can iterate on the parts independently. The more you can make changes independently, the easier it gets to move forward. How do you move a giant mountain of dirt? One shovel at a time.

The same approach makes sense as you move towards DevOps. It’s a process. Do a little bit, then do a little bit more. Get better each time.

The Cloud Journey

The first step into cloud for most teams is the infamous “lift and shift” of existing software onto VMs in some form of cloud. It may even be VMware VMs in your own data centers, but that’s a start. It moves you in the direction of virtualization. This is a good thing, but it’s only the first baby step towards cloud. Don’t get me wrong, it’s still a useful step. Many programs want to go to the cloud, and a lift and shift is the only way they can realistically get started.

I call this Cloud 1.0. If you are using a public cloud, you gain a huge business benefit: your infrastructure moves from a CapEx problem to an OpEx problem. You don’t have to do long-range capital planning for data center expansion, because you can assume the cloud provider has virtually infinite capacity. You do still have to plan, of course, since your finance folks will want to project costs. But it’s an operating expense (OpEx) now, which essentially comes out of a different budget - one that in my experience is a lot easier to get. Large capital expenditures always seem to involve a lot more planning and approval. Once you lift and shift onto a cloud platform, you can incrementally scale your solution and you are at least in the right environment. But Cloud 1.0 is really just the beginning.

Cloud 2.0 is when you really start to embrace changes that ripple into how you actually develop and deliver software. This is perhaps the biggest change you will face, because it’s a departure from everything you are used to. And that’s a big deal. You may not have the skills you need. My experience is that some Dev teams know only development. This seems much more common in Java shops. The things I am about to discuss definitely require Linux skills. If your teams don’t have those skills, you may need some training. Don’t discount this. Lack of Linux skills definitely slowed down several DevOps efforts I’ve been a part of.

Real Cloud Makes Everything Different - No, REALLY

That’s worth saying again. Cloud 2.0 is DIFFERENT. There are two reasons behind this, and they have far-reaching implications for your processes.

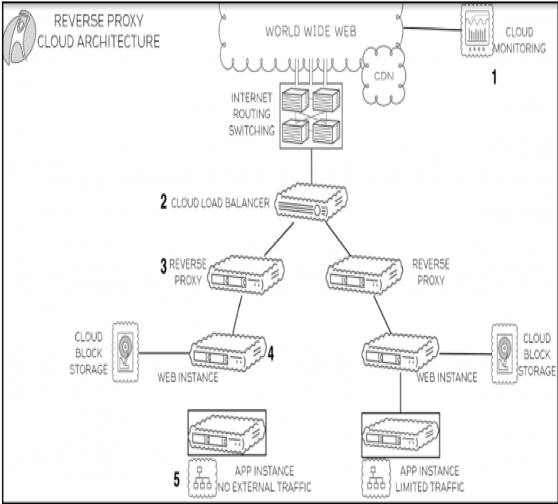

The obvious first change is “infrastructure as code.” This can be any tool you want, as long as running its code creates some combination of networking, compute, and storage. This can be terraform, ansible, chef, salt, puppet, Python using boto, or whatever.
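Under the hood, orchestration tools such as terraform compare the infrastructure your code describes with what actually exists and work out what to create, change, or delete. The toy Python sketch below illustrates that idea only; it is not terraform's algorithm or API, and the `Resource` type and `plan` function are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True, order=True)
class Resource:
    kind: str           # e.g. "network", "vm", "disk"
    name: str           # unique within its kind
    settings: str = ""  # stand-in for size, image, and every other attribute


def plan(desired, actual):
    """Diff the desired state (from code) against what actually exists."""
    want = {(r.kind, r.name): r for r in desired}
    have = {(r.kind, r.name): r for r in actual}
    return {
        "create": sorted(want[k] for k in want.keys() - have.keys()),
        "update": sorted(want[k] for k in want.keys() & have.keys() if want[k] != have[k]),
        "delete": sorted(have[k] for k in have.keys() - want.keys()),
    }


desired = [Resource("network", "app-net"), Resource("vm", "web-1", "small"), Resource("vm", "web-2", "small")]
actual = [Resource("network", "app-net"), Resource("vm", "web-1", "tiny"), Resource("vm", "old-batch", "large")]

for action, resources in plan(desired, actual).items():
    print(action, [r.name for r in resources])
# create ['web-2']
# update ['web-1']
# delete ['old-batch']
```

Calling `plan(desired, desired)` returns three empty lists: running the same code against an environment it already built changes nothing, which is a large part of what makes infra as code repeatable.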

Now there’s a hidden secret here. The ASSUMPTION is that if you have “infra as code,” you also have deployment automation. That may involve the same tools, or not. For really simple things you may bake the whole application into VM images. I don’t recommend that for anything non-trivial, because managing those images (build, test, store, update, delete) quickly becomes hell. So if you deploy all your infra automatically, and you deploy your software and its configuration automatically, then you can run some code and, a little while later, have an instance of your whole software application running. The benefits are many, but they include:

- repeatability - you get exactly the same setup every time you run the code

- source control - put that code under source control (like git) and manage it like code

- inspectability - unlike GUI operations, you can have a second set of eyes review it

- shareability - you can share the code between teams, building on others’ successes

- recoverability - you can rebuild identical infra somewhere else if a catastrophe happens

You need a good Disaster Recovery (DR) plan anyway, and if you are at this point, you can put something in place that actually works. You can choose to use the cloud provider’s proprietary solution or something open source, but the point is just to DO IT. I highly recommend terraform for this, though. Your scripts do end up being specific to the cloud vendor, but at least they are readable code. I draw a distinction here between cloud infra orchestration systems (like terraform, AWS CloudFormation, or Azure Resource Manager) and configuration management solutions like chef, puppet, or ansible. If you are not already familiar with all of these, I recommend you stop now and go read up on them.

A typical scenario uses terraform to create the infra and then chef, puppet, ansible, or salt to deploy and configure the software. The details of what is installed on a given host are usually specified as part of the cloud-init process. This is somewhat specific to the cloud vendor: AWS does it one way, and Azure does it in a similar way. Terraform abstracts most of those differences away, but you should really learn how it works underneath. And there are gotchas, according to some. The point is that you should avoid creating custom VM images, because managing them becomes massively painful, and when you need to use them you are copying or moving images of hundreds of gigabytes over the cloud network. Nothing happens fast that way. If you use terraform to boot a standard image and pass in the right cloud-init data to trigger your configuration management, then you can automatically deploy everything.
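Here is one way the “standard image plus cloud-init data” approach might look. This Python sketch renders a shell-script user data payload (cloud-init runs user data that begins with `#!` as a script) which installs Ansible and runs `ansible-pull` against a configuration repository. It assumes a Debian/Ubuntu base image; the repository URL and playbook name are hypothetical, and you would pass the result to terraform (for example, via the `user_data` argument of an AWS instance) or to your provider's API.

```python
import base64
import shlex


def render_user_data(repo_url, playbook="site.yml"):
    """Return a shell-script user data payload for cloud-init to run on first boot."""
    # cloud-init treats user data that starts with "#!" as a script to execute.
    # Assumes a Debian/Ubuntu base image; the repo and playbook are hypothetical.
    lines = [
        "#!/bin/bash",
        "set -euo pipefail",
        "apt-get update -y",
        "apt-get install -y ansible git",
        # Pull the configuration repo and apply the playbook locally.
        f"ansible-pull -U {shlex.quote(repo_url)} {shlex.quote(playbook)}",
    ]
    return "\n".join(lines) + "\n"


def encode_user_data(script):
    # The raw EC2 RunInstances API expects base64; many tools encode it for you.
    return base64.b64encode(script.encode("utf-8")).decode("ascii")


script = render_user_data("https://git.example.com/ops/web-config.git")
print(script)
```

Because the image stays stock, the only thing that varies per host is this small text payload, which lives in source control next to the rest of your infra code.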

I cannot emphasize this enough. Deployment automation is the first and most important part of doing real cloud. You will get close to full automation, then some small part or another will turn out to be difficult, and you will think “that’s OK, I’ll just do that part manually.” Don’t do it. Don’t let that little devil on your shoulder tempt you that way.

Finish the job. Be able to deploy your whole solution by running a script. If your solution deploys its own database, then you also have to run the scripts to at least create your schemas, or to restore a database backup that has either a known set of data or a recent copy of your data. This is the holy grail: to be able to deploy your whole software solution to the cloud by running a script and passing it some data (like which database backup to use, for example). When you get to this point, you can celebrate. You have hit a milestone in Cloudy DevOps.
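A “deploy everything by running a script and passing it some data” entry point could be as small as the following Python sketch. The step strings are placeholders for real calls to your infra, configuration management, and database tooling, and the flag names (`--env`, `--db-backup`) are hypothetical.

```python
import argparse


def deployment_steps(env, db_backup=None):
    """List the phases of a whole-solution deployment, in order."""
    # Each string is a placeholder for a real call: terraform for the infra,
    # your configuration management tool, and your database tooling.
    steps = [f"provision infrastructure for {env}", f"configure software on {env}"]
    if db_backup:
        steps.append(f"restore database backup {db_backup}")
    else:
        steps.append("create empty database schemas")
    steps.append(f"run smoke tests against {env}")
    return steps


def main(argv=None):
    parser = argparse.ArgumentParser(description="Deploy the whole solution with one command.")
    parser.add_argument("--env", required=True, help="name for this environment")
    parser.add_argument("--db-backup", help="backup to restore; omit to create empty schemas")
    args = parser.parse_args(argv)
    for number, step in enumerate(deployment_steps(args.env, args.db_backup), start=1):
        print(f"[{number}] {step}")
    return 0
```

From a shell you would wire it up with `raise SystemExit(main())` and run something like `python deploy.py --env qa-7 --db-backup nightly-2024-01-09` (both names made up). Keeping the step list in one function makes it easy to review exactly what a deployment does.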

But you are not done. Even more important than the code to create and deploy the infrastructure and software is the code to DELETE the infrastructure.

The important concept here is DISPOSABILITY. If you can create it from code, you can delete it from code. Poof! It’s gone. This should be part and parcel of how you test your creation scripts. Create. Test and verify. Destroy. Repeat and ensure it’s the same, every time.
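The create, test, destroy cycle maps naturally onto a construct that guarantees cleanup. In this Python sketch, a context manager creates an environment and destroys it in a `finally` block, so teardown happens even when tests fail. `FakeCloud` is a hypothetical in-memory stand-in for real provisioning calls.

```python
from contextlib import contextmanager


class FakeCloud:
    """In-memory stand-in for a cloud account (hypothetical, for illustration only)."""

    def __init__(self):
        self.resources = set()

    def create(self, name):
        self.resources.add(name)

    def destroy(self, name):
        self.resources.discard(name)


@contextmanager
def ephemeral_environment(cloud, env, parts):
    """Create an environment, hand it to the caller, and always destroy it."""
    created = []
    try:
        for part in parts:
            resource = f"{env}/{part}"
            cloud.create(resource)
            created.append(resource)
        yield created
    finally:
        # Runs whether tests pass, fail, or creation itself blows up partway.
        for resource in reversed(created):  # tear down in reverse creation order
            cloud.destroy(resource)


cloud = FakeCloud()
with ephemeral_environment(cloud, "team-a-pr-42", ["network", "database", "web"]) as env:
    print("testing against", env)
print("left running:", cloud.resources)  # left running: set()
```

Tracking only what was actually created means a half-finished deployment still gets cleaned up, which is exactly the case where resources tend to be forgotten.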

The ability to destroy the resources is even more important than the ability to create them. Stop for a minute and think. Why is that more important? There are two big reasons. The smaller reason is money. I’ve seen teams create lots and lots of infrastructure and then not turn it off, and the next thing you know you are spending $300K per quarter and the finance people get really unhappy. Build the discipline to create infrastructure and then destroy it after you’ve used it. But the bigger reason is the workflow that dynamic creation and destruction of infrastructure enables.
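One way to build the create-then-destroy discipline into the system itself is a scheduled “reaper” that finds environments that have outlived their purpose. The Python sketch below assumes each environment carries a creation time and an optional keep flag (for example, recorded as resource tags); the inventory and the eight-hour limit are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def expired_environments(environments, now, max_age=timedelta(hours=8)):
    """Return names of environments older than max_age that are not marked keep."""
    return sorted(
        name
        for name, info in environments.items()
        if not info.get("keep", False) and now - info["created"] > max_age
    )


# Hypothetical inventory, e.g. built from "created" and "keep" tags on resources.
utc = timezone.utc
inventory = {
    "team-a-feature-x": {"created": datetime(2024, 1, 10, 9, 0, tzinfo=utc)},
    "qa-regression-17": {"created": datetime(2024, 1, 10, 15, 0, tzinfo=utc)},
    "shared-demo": {"created": datetime(2024, 1, 2, 9, 0, tzinfo=utc), "keep": True},
}
now = datetime(2024, 1, 10, 18, 0, tzinfo=utc)
print(expired_environments(inventory, now))  # ['team-a-feature-x']
```

A nightly job would pass each returned name to your destroy script; the `keep` flag gives long-lived shared environments an explicit, reviewable exemption.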

This changes everything. You now completely decouple Engineers working on one part of your solution from dependencies on Engineers working on other parts. Team A can deploy version 1 with some changes they are working on, develop and test to their hearts’ content, and then destroy the whole thing a few hours later. For less than the cost of a few pizzas, your team can make huge progress on things that previously depended on other teams. Not only that, your testing/QA efforts can proceed independently. Want to do regression testing against a specific version of code and a specific version of your database? Deploy that combination, test it, and turn it all off by dinner.
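“Deploy that combination and test” scales to a whole matrix of combinations once environments are cheap to create. This Python sketch, with hypothetical version and snapshot names, builds one throwaway environment spec per pair of code version and database snapshot, each with a unique name so parallel runs do not collide.

```python
from itertools import product


def environment_name(app_version, db_snapshot):
    """Derive a unique, lowercase, hyphenated name for one version combination."""
    def clean(s):
        return s.lower().replace(".", "-").replace("_", "-")
    return f"regress-{clean(app_version)}-{clean(db_snapshot)}"


def regression_matrix(app_versions, db_snapshots):
    """One throwaway environment spec per (code version, database snapshot) pair."""
    return [
        {"env": environment_name(app, db), "app_version": app, "db_snapshot": db}
        for app, db in product(app_versions, db_snapshots)
    ]


for spec in regression_matrix(["1.4.0", "1.5.0"], ["prod-2024-01", "empty"]):
    print(spec["env"])
```

Each spec can then be deployed, tested, and destroyed on its own, in parallel if your cloud budget allows.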

Seriously. This is the single most important step you can take. Well, other than actually having automated tests. You do have those, right? That’s just good software practice and not specific to Cloudy DevOps, but you do need it to really make the next step sing. If you can automatically instantiate cloud servers, deploy and configure your software, and automatically run tests against it, then you have the Lego blocks you need to start the next stage of the cloud journey. You can start to think about Continuous Integration and Continuous Delivery (CI/CD). You have the blocks in place to really start taking your software apart, breaking it into components, and reworking it towards micro-services. You can really start thinking about containers (if you didn’t already start there up above).

Create, test, destroy. Sounds almost biblical. But it’s the foundation for Cloudy DevOps. It’s not easy to get there if your starting point is old-school Enterprise software development. But you don’t need to boil the ocean. You can make incremental progress. It reminds me of the admittedly apocryphal story of what Michelangelo said when he was asked how he sculpted his famous statue David. He supposedly said “well, I carved away everything that didn’t look like David.” You can do the same thing with DevOps. Stop doing all the things that don’t look like DevOps.

Next up: talking about Ops and how your mind-set needs to change to start to really make DevOps work for you. Stay tuned!